Implementing Job Exception Retrying in Quartz.NET

This is the second post in a three-part series on Quartz.NET, showing how my team at work implemented a workflow system with Quartz.NET!

This post follows on from my first post, which introduced Quartz.NET, and assumes you have a basic understanding of Quartz.NET. This article also gives a good example of how you can create useful Job Listeners.

Please note: This tutorial has implementations for both Quartz.NET 2.x and Quartz.NET 3.x.

Background

Before we begin, I'd like to give a little background on why we are using Quartz.NET to build a simple workflow system. At work, we were presented with a problem: we had three data stores that needed to be updated atomically, so a change in one required changes in the other two. The solution needed to:

- Be able to both chain jobs and run jobs in parallel

- Carry over a payload between jobs

I was given the opportunity to develop a workflow system that would help us achieve these requirements.

The requirements of the fault-tolerant system were as follows:

- Handle exceptions by re-trying failed jobs, with increasing time periods between re-tries. The growing delay prevents jobs that fail consistently from overloading the server, which could happen if they were re-tried with no delay at all.

- Store jobs that fail a certain number of times in a database for logging purposes.

- Persist, remember and re-start jobs that were interrupted during execution in the unlikely event of server failure.

Implementation

Out of the box, Quartz.NET jobs come with the ability to toggle a field called RequestRecovery. If this field is set to true, Quartz.NET will remember if a job was interrupted halfway through execution because the server stopped or failed. When the scheduler is restarted, the interrupted job is executed again immediately. However, this only works if Quartz.NET is configured to use a database-backed job store. This was exactly what we needed to address our third requirement for the system.
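As a rough illustration of the recovery flag described above, the sketch below builds a job that asks to be re-run after an interruption. It assumes Quartz.NET 3.x; ExampleJob, RecoverableJobs and the identity strings are placeholders made up for this example, and recovery only takes effect when the scheduler is backed by a persistent job store.

```csharp
using System;
using System.Threading.Tasks;
using Quartz;

// Hypothetical job, used only for illustration.
public class ExampleJob : IJob
{
    public Task Execute(IJobExecutionContext context)
    {
        // Recovering is true when this run replaces an interrupted execution.
        if (context.Recovering)
        {
            Console.WriteLine("Re-running {0} after an interruption.", context.JobDetail.Key);
        }

        return Task.CompletedTask;
    }
}

public static class RecoverableJobs
{
    // Builds a job detail that requests recovery after a scheduler failure.
    public static IJobDetail Create()
    {
        return JobBuilder.Create<ExampleJob>()
            .WithIdentity("exampleJob", "exampleGroup") // placeholder names
            .RequestRecovery()
            .Build();
    }
}
```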

However, there was no existing functionality in Quartz.NET to automatically retry jobs that run into exceptions. To get the retrying of jobs working, we utilised the Listeners functionality within Quartz (as explained in the previous blog entry).

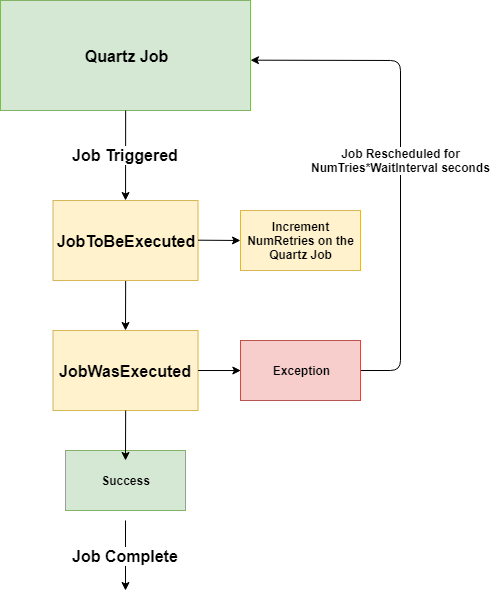

At a high level, we store an integer within each job's JobDataMap. This integer represents how many times the job has been attempted.

We then created a Job Listener. The Job Listener checks whether or not a job finished executing with an exception. If an exception was thrown, the Job Listener checks whether the number of attempts is still within a specified threshold (for example, retry a job a maximum of 3 times). If it is, the job is rescheduled to run after a delay that grows with the number of failed attempts. The attempt count itself is incremented each time the job is about to run.

Let's go ahead and create this Job Listener! We will call it JobFailureHandler. We need to provide our own methods for the job listener by implementing the IJobListener interface.

Quartz.NET 2.x Implementation

Job Listeners for Quartz.NET 2.x are covered in the Quartz.NET 2.x documentation. The implementation for Quartz.NET 2.x is as follows:

    // Quartz.NET 2.x
    using System;
    using Quartz;

    public class JobFailureHandler : IJobListener
    {
        public string Name => "FailJobListener";

        public void JobToBeExecuted(IJobExecutionContext context)
        {
            // Initialise the attempt counter the first time the job runs.
            if (!context.JobDetail.JobDataMap.Contains(Constants.NumTriesKey))
            {
                context.JobDetail.JobDataMap.Put(Constants.NumTriesKey, 0);
            }

            // Count this attempt.
            var numberTries = context.JobDetail.JobDataMap.GetIntValue(Constants.NumTriesKey);
            context.JobDetail.JobDataMap.Put(Constants.NumTriesKey, ++numberTries);
        }

        public void JobExecutionVetoed(IJobExecutionContext context) { }

        public void JobWasExecuted(IJobExecutionContext context, JobExecutionException jobException)
        {
            // Nothing to do when the job completed without an exception.
            if (jobException == null)
            {
                return;
            }

            var numTries = context.JobDetail.JobDataMap.GetIntValue(Constants.NumTriesKey);

            // Give up once the attempt count exceeds the limit.
            if (numTries > Constants.MaxRetries)
            {
                Console.WriteLine("Job with ID and type: {0}, {1} has run {2} times and has failed each time.",
                    context.JobDetail.Key, context.JobDetail.JobType, numTries);
                return;
            }

            // The delay grows linearly with the number of attempts so far.
            var delaySeconds = Constants.WaitInterval * numTries;

            var trigger = TriggerBuilder
                .Create()
                .WithIdentity(Guid.NewGuid().ToString(), Constants.TriggerGroup)
                .StartAt(DateTime.Now.AddSeconds(delaySeconds))
                .Build();

            Console.WriteLine("Job with ID and type: {0}, {1} has thrown the exception: {2}. Running again in {3} seconds.",
                context.JobDetail.Key, context.JobDetail.JobType, jobException, delaySeconds);

            // Swap the trigger that just fired for the delayed one.
            context.Scheduler.RescheduleJob(context.Trigger.Key, trigger);
        }
    }

Quartz.NET 3.x Implementation

EDIT 4/11/2018: As pointed out by h9k in the comments section, the above works for Quartz.NET 2.x. To get this working for Quartz.NET 3.x, first refer to the Quartz.NET 3.x Job Listener documentation. All IJobListener methods now return Task and accept a CancellationToken. The updated version for Quartz.NET 3.x looks like this:

    // Quartz.NET 3.x
    using System;
    using System.Threading;
    using System.Threading.Tasks;
    using Quartz;

    public class JobFailureHandler : IJobListener
    {
        public string Name => "FailJobListener";

        public Task JobToBeExecuted(IJobExecutionContext context, CancellationToken ct)
        {
            // Initialise the attempt counter the first time the job runs.
            if (!context.JobDetail.JobDataMap.Contains(Constants.NumTriesKey))
            {
                context.JobDetail.JobDataMap.Put(Constants.NumTriesKey, 0);
            }

            // Count this attempt.
            var numberTries = context.JobDetail.JobDataMap.GetIntValue(Constants.NumTriesKey);
            context.JobDetail.JobDataMap.Put(Constants.NumTriesKey, ++numberTries);

            // Task.CompletedTask also works on .NET Framework 4.6 or later.
            return Task.FromResult<object>(null);
        }

        public Task JobExecutionVetoed(IJobExecutionContext context, CancellationToken ct)
        {
            return Task.FromResult<object>(null);
        }

        public async Task JobWasExecuted(IJobExecutionContext context, JobExecutionException jobException, CancellationToken ct)
        {
            // Nothing to do when the job completed without an exception.
            if (jobException == null)
            {
                return;
            }

            var numTries = context.JobDetail.JobDataMap.GetIntValue(Constants.NumTriesKey);

            // Give up once the attempt count exceeds the limit.
            if (numTries > Constants.MaxRetries)
            {
                Console.WriteLine("Job with ID and type: {0}, {1} has run {2} times and has failed each time.",
                    context.JobDetail.Key, context.JobDetail.JobType, numTries);
                return;
            }

            // The delay grows linearly with the number of attempts so far.
            var delaySeconds = Constants.WaitInterval * numTries;

            var trigger = TriggerBuilder
                .Create()
                .WithIdentity(Guid.NewGuid().ToString(), Constants.TriggerGroup)
                .StartAt(DateTime.Now.AddSeconds(delaySeconds))
                .Build();

            Console.WriteLine("Job with ID and type: {0}, {1} has thrown the exception: {2}. Running again in {3} seconds.",
                context.JobDetail.Key, context.JobDetail.JobType, jobException, delaySeconds);

            // Swap the trigger that just fired for the delayed one.
            await context.Scheduler.RescheduleJob(context.Trigger.Key, trigger, ct);
        }
    }

Constants

EDIT 28/08/2018: Forgot to add the constants file, here it is:

    public class Constants
    {
        public static readonly int WaitInterval = 5; // Seconds
        public static readonly int MaxRetries = 3;
        public static readonly string NumTriesKey = "numTriesKey"; // Can be anything
        public static readonly string TriggerGroup = "failTriggerGroup"; // Can be anything
    }

Persisting Data

EDIT 4/11/2018: As pointed out by h9k, every job that should be handled by our JobFailureHandler needs an additional attribute to work properly: [PersistJobDataAfterExecution]. Without this attribute, a job does not persist its JobDataMap (where the failure count is stored), so the failure count never increases and the job is retried indefinitely. The attribute is applied like this:

    [PersistJobDataAfterExecution]
    public class ExampleJob : IJob
    {
        // Quartz.NET 2.x signature; in 3.x, Execute returns Task instead of void.
        public void Execute(IJobExecutionContext context) { ... }
    }

Explanation

So what is our class doing? Well, a few things as you can see - but let's step through it.

JobToBeExecuted

This method is run before a job is executed. Here, we check whether the JobDataMap contains the key NumTriesKey (we defined this in our Constants.cs file; it's just a string). If it doesn't, we add it to the map and set it to 0.

Then, every time the job executes, we increment this count, which lets us track how many times the job has been attempted.

JobExecutionVetoed

This method is empty, as we don't need to do anything if the job's execution is vetoed (for example, by a trigger listener).

JobWasExecuted

This method is run after any job has finished executing (either it completed successfully or ran into an exception). We simply want to return and do nothing if there was no exception.

If there is an exception, we check whether the number of attempts stored in the job (under NumTriesKey) exceeds the limit we put in the constant MaxRetries. If it exceeds this limit, we stop retrying and log a message (optional). Because the count is incremented before every run, a job is attempted at most MaxRetries + 1 times in total: the initial run plus MaxRetries retries.

If it is within this limit, we create a new trigger whose start time is pushed back further with each attempt (WaitInterval * numTries seconds from now). Obviously, you can change the wait duration to suit your needs, but I'm simply rescheduling the job to run in (WaitInterval * numTries) seconds. Then, using this new trigger, we reschedule the job that failed to run at the new time.
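To make the timing concrete, here is a small standalone sketch of the delay calculation described above. It does not use Quartz.NET at all; RetrySchedule and NextDelaySeconds are names invented for this illustration, and the constants simply mirror the values in the Constants class.

```csharp
using System;

public static class RetrySchedule
{
    // Values mirror the Constants class from this post.
    private const int WaitInterval = 5; // seconds
    private const int MaxRetries = 3;

    // Returns the delay in seconds before the next attempt, or null when
    // the listener would give up. numTries is the number of attempts so far.
    public static int? NextDelaySeconds(int numTries)
    {
        if (numTries > MaxRetries)
        {
            return null;
        }

        return WaitInterval * numTries;
    }

    public static void Main()
    {
        // Simulate a job that fails on every attempt.
        for (int numTries = 1; numTries <= MaxRetries + 1; numTries++)
        {
            int? delay = NextDelaySeconds(numTries);
            Console.WriteLine(delay.HasValue
                ? $"Attempt {numTries} failed: retry in {delay.Value} seconds"
                : $"Attempt {numTries} failed: giving up");
        }
    }
}
```

With the values above, a job that always fails is retried after 5, 10 and 15 seconds, and the listener gives up after the fourth failed attempt.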

Overview

A flow diagram which may help you understand the flow is as follows:

Awesome! We just implemented our own exception-tolerant jobs! All we need to do now is attach the listener to the scheduler we are creating our jobs for, and every job that runs into an exception will be retried with an increasing delay.

    var myJobListener = new JobFailureHandler();
    scheduler.ListenerManager.AddJobListener(myJobListener);
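The call above attaches the listener to every job on the scheduler. If only some jobs should be retried, one option is to pass a matcher when registering the listener. The sketch below assumes those jobs share a group called "workflowJobs"; the group name and the ListenerRegistration helper are made up for illustration.

```csharp
using Quartz;
using Quartz.Impl.Matchers;

public static class ListenerRegistration
{
    // Attaches the listener only to jobs whose group is "workflowJobs"
    // (a hypothetical group name chosen for this example).
    public static void Register(IScheduler scheduler, IJobListener listener)
    {
        scheduler.ListenerManager.AddJobListener(
            listener,
            GroupMatcher<JobKey>.GroupEquals("workflowJobs"));
    }
}
```

Jobs in any other group are then left alone when they throw, which can be handy when only part of the workload is safe to re-run.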

And that's it! We now have jobs that automatically retry when they run into an exception. A possible extension is to react to particular exceptions in specific ways, for example by deleting all the child jobs chained from the job that failed.
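As one possible starting point for exception-specific handling, the sketch below decides whether a failure is worth retrying by looking at its root cause. It is plain C# with no Quartz.NET dependency, and it assumes the exception thrown by the job can be reached through the inner-exception chain of the exception passed to the listener. RetryPolicy, ShouldRetry and the choice of "transient" exception types are all hypothetical.

```csharp
using System;
using System.IO;

public static class RetryPolicy
{
    // Returns true when the root cause looks transient and a retry may help.
    // Which exception types count as transient is an assumption of this sketch.
    public static bool ShouldRetry(Exception jobException)
    {
        if (jobException == null)
        {
            return false;
        }

        // GetBaseException walks the InnerException chain to the original cause.
        Exception root = jobException.GetBaseException();
        return root is TimeoutException || root is IOException;
    }
}

public static class Program
{
    public static void Main()
    {
        var transient = new InvalidOperationException("wrapper", new TimeoutException("timed out"));
        var permanent = new InvalidOperationException("wrapper", new ArgumentException("bad input"));

        Console.WriteLine(RetryPolicy.ShouldRetry(transient)); // True
        Console.WriteLine(RetryPolicy.ShouldRetry(permanent)); // False
    }
}
```

A check like this could sit at the top of JobWasExecuted, returning early (or cleaning up chained jobs) when the failure is not worth retrying.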